There have been some interesting developments in the discussions about the future of Tesla’s Full Self-Driving software. Elon has recently pronounced that FSD software will be dramatically better by the end of the year, and there is a lot of buzz about the possibility of Tesla’s neural networks moving toward an End-to-End model.

What has Elon been saying?

xAI.org Twitter Space: End-to-End

On July 14th, 2023, Elon hosted a Twitter Space discussing his latest company, x.AI, whose mission is “to understand the true nature of the universe.” During that call, while discussing AI, he mentioned that, in general, people are trying to “brute force” AGI (Artificial General Intelligence) and not succeeding. He went on to say:

“So if I look at the experience with Tesla, what we’re discovering over time is that we actually overcomplicated the problem. I can’t speak in too much detail about what Tesla has figured out but except to say that in broad terms the answer was much simpler than we thought. We were too dumb to realize how simple the answer was.”

Elon Musk, Twitter Space from x.AI, 2023-07-14, timestamp 44:06

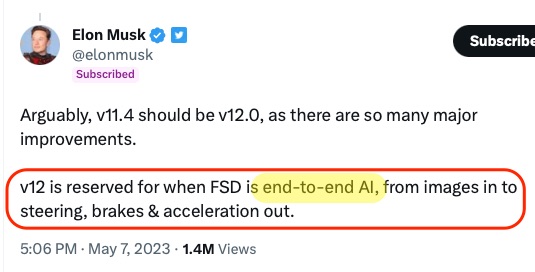

Tweets of interest:

From back on May 7:

Elon proclaims “Full Self-Driving” by the end of the year

A lot of Elon’s recent proclamations were summed up well by Electrek in this article. I’m trying to be unbiased here. Elon’s comments are generally vague and interpreted in many different ways, but have very often been over-optimistic ESPECIALLY regarding the progress of autonomous driving. See this timeline for links to a decade of optimistic declarations.

Discussion

Many people have taken all this to mean that Tesla is moving its FSD neural nets toward an End-to-End design. In the current self-driving paradigm, there are multiple layers. To explain, I will totally oversimplify the self-driving car system into the following 3 broad layers. See references for more details.

- Data Capture: The 8 cameras create a movie.

- Feature Detection: The movie is analyzed to find features (cars, lanes, stop lights, pedestrians, etc.) that represent the dynamic state of the world.

- Planning & Execution: Then higher-level processing is applied to this worldview and used to determine how to drive (direction and speed) to achieve the current navigation goal.

Originally, it was assumed that the neural networks were used in step 2, Feature Detection. Training a neural network to complete this task is a difficult and time-consuming process. The third step, Planning & Execution, was assumed to be more traditional programming, although Tesla has recently stated that it is moving more and more of this logic into neural networks as well.

In an End-to-End model, you remove the second layer. So the inputs are the navigation goal and the movie. The outputs are the driving instructions. Yes, this is a pretty complex and impressive computer, but it also is possibly a closer representation of how humans drive. We know where we want to go, look out into the world, and drive. Yes, we humans can do feature detection as well, but that is not necessarily how we drive. We just go.
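To make the difference concrete, here is a toy Python sketch of the two designs. Everything in it is hypothetical and invented for illustration (`Feature`, `DrivingCommand`, `toy_detect`, `toy_plan`, `toy_model`, and the brightness rule); it says nothing about how Tesla's software is actually built. The only point is the shape of the interfaces: the layered version exposes a list of detected features you can inspect, while the end-to-end version goes straight from the movie and the goal to a driving command.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Movie = List[List[float]]  # placeholder: each frame is a list of pixel values


@dataclass
class Feature:
    kind: str          # e.g. "car", "lane", "stop_light"
    distance_m: float


@dataclass
class DrivingCommand:
    steering_deg: float
    speed_mps: float


def modular_drive(
    movie: Movie,
    goal: str,
    detect: Callable[[Movie], List[Feature]],
    plan: Callable[[List[Feature], str], DrivingCommand],
) -> Tuple[DrivingCommand, List[Feature]]:
    """Layered design: the detected features are an inspectable intermediate."""
    features = detect(movie)        # layer 2: Feature Detection
    command = plan(features, goal)  # layer 3: Planning & Execution
    return command, features


def end_to_end_drive(
    movie: Movie,
    goal: str,
    model: Callable[[Movie, str], DrivingCommand],
) -> DrivingCommand:
    """End-to-end design: one opaque function, no intermediate to inspect."""
    return model(movie, goal)


# Toy stand-ins (assumptions for illustration only).
def toy_detect(movie: Movie) -> List[Feature]:
    last_frame = movie[-1]
    brightness = sum(last_frame) / len(last_frame)
    # Pretend a bright frame means a stop light 20 m ahead.
    return [Feature("stop_light", 20.0)] if brightness > 0.5 else []


def toy_plan(features: List[Feature], goal: str) -> DrivingCommand:
    must_stop = any(f.kind == "stop_light" and f.distance_m < 30.0 for f in features)
    return DrivingCommand(steering_deg=0.0, speed_mps=0.0 if must_stop else 10.0)


def toy_model(movie: Movie, goal: str) -> DrivingCommand:
    # Same behavior as the layered version, but the caller only sees the output.
    return toy_plan(toy_detect(movie), goal)
```

Calling `modular_drive([[0.9]], "home", toy_detect, toy_plan)` hands back both the command and the features that led to it. Calling `end_to_end_drive([[0.9]], "home", toy_model)` hands back only the command.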

Impact 1: Delays!!

A complete re-architecting of the FSD code is more likely to be a timing setback than a jump forward. This is just how software development goes, and it seems even more true when the software is based on neural networks.

Impact 2: More uncertainty in the eventual ability

Neural networks are notoriously difficult to refine on a continuous improvement path. What worked previously (for example smooth turning) is not guaranteed to still work when the neural network is retrained.

Let’s say there are 100 complex test cases for the self-driving codebase. (In actuality, I hope there are millions of base test cases, and each of those has a variety of parameters that make the actual number of possible tests effectively infinite.) Right now, perhaps FSD passes 60% perfectly, completes another 20% but needs improvement on them, and fails the last 20%.
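As a rough illustration of why retraining is risky, here is a small Python sketch that tallies the hypothetical 60/20/20 split above and then lists the test cases that got worse after a retrain. The case names, the status labels, and the "after" results are all made up for the example.

```python
from collections import Counter
from typing import Dict, List

PASS, NEEDS_WORK, FAIL = "pass", "needs_improvement", "fail"
RANK = {FAIL: 0, NEEDS_WORK: 1, PASS: 2}  # higher is better


def summarize(results: Dict[str, str]) -> Dict[str, float]:
    """Return the fraction of test cases in each status."""
    counts = Counter(results.values())
    total = len(results)
    return {status: counts[status] / total for status in (PASS, NEEDS_WORK, FAIL)}


def regressions(before: Dict[str, str], after: Dict[str, str]) -> List[str]:
    """Return the cases whose status got worse after retraining."""
    return sorted(
        case
        for case, status in before.items()
        if case in after and RANK[after[case]] < RANK[status]
    )


# Hypothetical results: 60 pass, 20 need improvement, 20 fail.
before = {
    f"case_{i:03d}": PASS if i < 60 else NEEDS_WORK if i < 80 else FAIL
    for i in range(100)
}

# Hypothetical retrain: one failing case is fixed, one passing case breaks.
after = dict(before)
after["case_090"] = PASS
after["case_000"] = FAIL

print(summarize(before))
print(regressions(before, after))
```

The overall pass rate is the same before and after in this made-up example, yet `case_000` has quietly regressed. That is the kind of thing a continuous improvement path has to catch.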

With the end-to-end model, the system becomes a total black box. There is no way to look in and see where the problems are. (Search for “Mechanistic Interpretability” for more information.) If neural networks are just being used for feature detection, then a failure in identifying occluded stop signs (for example) can be linked back to the specific issues for more work.

Another concept you hear about these days is “Constitutional AI,” which is the idea of having your AI system adhere to a stated set of values (see TechCrunch article). There is probably a parallel idea that could be used for autonomous cars: a “governor” of sorts that would oversee the driving to keep the system running within some well-defined parameters. (Yep, a lot more to say about this teaser…)
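A very minimal sketch of what such a governor might look like in Python follows. It assumes the driving model proposes a speed and steering angle and that the "well-defined parameters" are simple numeric limits. The names `Limits`, `Command`, and `govern`, and the specific limits, are my own assumptions for illustration; a real system would need far richer rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    max_speed_mps: float
    max_steering_deg: float


@dataclass(frozen=True)
class Command:
    speed_mps: float
    steering_deg: float


def govern(proposed: Command, limits: Limits) -> Command:
    """Clamp whatever the driving model proposes into the allowed envelope."""
    speed = min(max(proposed.speed_mps, 0.0), limits.max_speed_mps)
    steering = max(
        -limits.max_steering_deg,
        min(proposed.steering_deg, limits.max_steering_deg),
    )
    return Command(speed_mps=speed, steering_deg=steering)


# Hypothetical residential-street limits.
residential = Limits(max_speed_mps=13.0, max_steering_deg=30.0)
print(govern(Command(speed_mps=40.0, steering_deg=-50.0), residential))
```

The governor does not need to understand why the model proposed something. It only enforces the envelope, which is what makes it usable on top of a black box.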

Biggest Red Flag of All

At the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Ashok Elluswamy (Director – Autopilot Software at Tesla) gave a talk (see YouTube below). A whole section was about the difficulty in detecting and modeling road lanes. I agree that lanes are extremely complex to model and predict in real time in the real world (5:33). He goes on to talk about creating training data and being able to auto-label features. This is all amazing stuff!

The end goal of these development efforts is to enable Tesla FSD to drive on any street because the computer has little reliance on any map metadata. My perspective is that this would be great if it were possible. Tesla FSD has yet to show that this is possible, and I do not see a trajectory that indicates it will be possible in the near future. I also know there are cases where they had problems (see O’Dowd, Gerber stop sign fiasco) that they solved without a software update, so it must have been by adding map metadata.

I believe that the current FSD has the best demonstrations in areas that have the most complete map metadata like San Francisco.

Why not use the fleet to manage and continuously enhance the map metadata? Yes, roads and lanes get updated, but they are largely STATIC, and with millions of cars driving it seems possible to build and maintain this information. The mapping metadata could be constantly checked against the camera data. Updates could be swift. The drive system could attempt to drive when outdated metadata is detected. It only needs to recognize if it is capable of doing the drive or not. And when it can’t drive, just turn over to the human.
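Here is a hedged Python sketch of that check: compare the stored metadata for a road segment against what the cameras currently see, and decide whether to drive normally, drive cautiously (and report the mismatch), or hand over to the human. The field names, the `Action` values, and the 0.8 confidence threshold are assumptions I made up for the example.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import List, Tuple


class Action(Enum):
    DRIVE = "drive"
    DRIVE_CAUTIOUSLY = "drive_cautiously"
    HAND_OVER = "hand_over_to_human"


@dataclass(frozen=True)
class SegmentMetadata:
    speed_limit_mph: int
    lane_count: int
    has_stop_sign: bool


def find_mismatches(stored: SegmentMetadata, observed: SegmentMetadata) -> List[str]:
    """Return the names of fields where the map and the cameras disagree."""
    return [
        f.name
        for f in fields(SegmentMetadata)
        if getattr(stored, f.name) != getattr(observed, f.name)
    ]


def decide(
    stored: SegmentMetadata,
    observed: SegmentMetadata,
    perception_confidence: float,
    min_confidence: float = 0.8,  # hypothetical threshold
) -> Tuple[Action, List[str]]:
    """Pick an action and return the mismatches to report back to the fleet."""
    mismatches = find_mismatches(stored, observed)
    if not mismatches:
        return Action.DRIVE, mismatches
    if perception_confidence >= min_confidence:
        # Map looks outdated, but the car trusts what it sees.
        return Action.DRIVE_CAUTIOUSLY, mismatches
    return Action.HAND_OVER, mismatches


stored = SegmentMetadata(speed_limit_mph=35, lane_count=2, has_stop_sign=True)
observed = SegmentMetadata(speed_limit_mph=25, lane_count=2, has_stop_sign=True)
print(decide(stored, observed, perception_confidence=0.9))
```

Either way, the list of mismatched fields is exactly what would be sent back so the shared map can be corrected.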

Of course, map data is required to do basic routing. I’m not thinking so much about relying on map metadata (speed limits, lane definition, stop signs, parking habits, congestion patterns, etc.), but more about making it a priority: continually collecting it, refining it, and using it as much as possible to enhance autonomous driving. Humans can read signs and interpret clues (construction items, flaggers, driving patterns) better than the car can today. Yes, the software and hardware will get better, and can possibly outperform humans in some areas, 360-degree attentiveness being the biggest. But, overall, I feel that in other areas humans will remain the “gold standard” for a long time.

Tesla seems to be more focused on fully autonomous driving without using metadata. It seems to me that their cars drive much better where better metadata is available and being used. Many people state that this “locational difference in ability” is because the neural nets have been trained from data in those areas, which I think makes sense, but I believe it to be less important in explaining this difference. Having and using map metadata seems like such a powerful technique for enhancing FSD driving ability.

As an example of the value of map metadata, when you first drive in a new area, it can be confusing. This would be like a self-driving car not using metadata. For example, I know when driving south on Ridge/Marburg to get in the right lane as I approach Brotherton Road because the left lane backs up with left-turning traffic. Then, I know to immediately get back to the left lane because there are often parked cars in the right lane prior to reaching Paxton Ave. Cars without this knowledge tend to get stuck in bad situations and often need to make difficult and potentially dangerous maneuvers to continue on.

For a self-driving car, there are countless examples of how this can benefit driving. Another easy example is coming to a 4-way stop in a residential area. Tesla FSD cannot currently determine by sight if the crossing traffic also has a stop sign. It does not read “4-way stop” text, and cannot interpret seeing the back sides of the cross traffic’s stop signs. Perhaps someday it will get better at both of these items, but it will be a very long time until it is as good as a human. So in the meantime, FSD approaches the stop not knowing if the crossing traffic has to stop or not (unless it is already using this metadata). Therefore, it waits and creeps until it thinks it can see far enough to go safely. A painfully slow process. But other Teslas may have driven on the cross street and seen the stop signs. This information can be passed on to the collective and out to the fleet, so all cars now know that this is a 4-way stop.
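A simple way to picture the fleet side of this is the Python sketch below. It assumes each car reports an (intersection, approach direction) pair whenever it sees a stop sign facing it, and that an approach counts as confirmed once enough cars have reported it. The intersection IDs, the report format, and the threshold of 3 reports are all hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple

Report = Tuple[str, str]  # (intersection_id, approach direction such as "N")


def confirmed_stop_approaches(
    reports: Iterable[Report], min_reports: int = 3
) -> Dict[str, Set[str]]:
    """Group approaches that enough cars have reported as stop-controlled."""
    confirmed: Dict[str, Set[str]] = {}
    for (intersection, approach), n in Counter(reports).items():
        if n >= min_reports:
            confirmed.setdefault(intersection, set()).add(approach)
    return confirmed


def is_all_way_stop(
    confirmed: Dict[str, Set[str]], intersection: str, approaches: List[str]
) -> bool:
    """True when every approach to the intersection has a confirmed stop sign."""
    return set(approaches) <= confirmed.get(intersection, set())


# Hypothetical fleet reports for two intersections.
reports: List[Report] = (
    [("int_A", d) for d in ["N", "S", "E", "W"] for _ in range(3)]
    + [("int_B", "N")] * 3
    + [("int_B", "S")] * 3
    + [("int_B", "E")]  # only one sighting so far, not yet confirmed
)

confirmed = confirmed_stop_approaches(reports)
print(is_all_way_stop(confirmed, "int_A", ["N", "S", "E", "W"]))
print(is_all_way_stop(confirmed, "int_B", ["N", "S", "E", "W"]))
```

A car arriving at `int_A` could then treat it as a 4-way stop from the start instead of creeping forward to find out.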

This does not mean, though, that this is the end of data collection for the intersection. Tesla can continuously evaluate how cars are moving through the intersection (under FSD or not). Also, if it does detect crossing traffic approaching the intersection at an unexpectedly high speed, it would know to use additional caution. Or perhaps it finds cars braking hard at this intersection, etc. This data can be collected and fed back into further updates. Perhaps 1) the stop sign has been removed and needs to be re-assessed, or 2) cars often run this stop sign and this should be accounted for in their driving.

For the Tesla universe, they can collect and disseminate this data, so all cars can benefit from the fleet. I envision that this would at first be slow feedback with monthly/weekly updates. But the cycle can be automated and eventually be near instantaneous. Like Waze/Google real-time traffic data on steroids.

Final Words

In the Tesla self-driving world, there seems to be this overarching idea that they will go from where they are today directly to a fully autonomous car. I think this is crazy. I tried to formulate some of my thoughts around this recently in a previous post. In that post, I did not explain this driving enhancement based on map metadata in any detail, but it is a big part of my thinking. You can see it here: Tesla FSD Milestones. I have also posted a bit on Twitter about it. My Twitter Blue account is https://twitter.com/fsd_now if you’d like to follow along.

FSD must crawl before it can walk. And walk before it can run. There will be a reasonable progression in autonomous driving. It will not magically show up one day. I have suggested a possible progression in this Tesla FSD Milestones posting.

I do not see Tesla FSD or any other software being able to handle autonomous driving without relying on this more complete map metadata. Now, I just need to wait for Tesla to realize this…

References and Further Reading

- Great video explaining “End-to-End” neural networks, by Stanford instructor Andrew Ng: What is End-to-end Deep Learning?

- Research Paper from 2016: End-to-End Learning for Self-Driving Cars, arXiv:1604.07316 [cs.CV]

We trained a convolutional neural network (CNN) to map raw pixels from a single front-facing camera directly to steering commands. This end-to-end approach proved surprisingly powerful. With minimum training data from humans the system learns to drive in traffic on local roads with or without lane markings and on highways. It also operates in areas with unclear visual guidance such as in parking lots and on unpaved roads.

- 2023-07-21 Podcast on NY Times Hard Fork

- Dario Amodei, the chief executive of Anthropic, is interviewed about the dangers of AI. Among other things, they discuss the inability of neural networks to explain themselves. This gets directly to my point that having driving autonomy be “all-or-nothing” is going to be very hard to improve in a continuous manner.

- Towards Data Science article: End-to-end learning, the (almost) every purpose ML method, which includes limitations that make end-to-end “an infeasible option” in different cases

- [CVPR’23 WAD] Keynote – Ashok Elluswamy, Tesla, Jun 29, 2023